Han Tianshun, a second-year PhD student in the School of Computer Science and Engineering, Faculty of Innovative Engineering, Macau University of Science and Technology, is the first author of the paper "PESTalk: Speech-Driven 3D Facial Animation with Personalized Emotional Styles," accepted at the ACM International Conference on Multimedia (ACM MM). The corresponding author is Wan Jun, Distinguished Associate Professor in the School of Computer Science and Engineering.

PhD student Han Tianshun

ACM MM, organized by the Association for Computing Machinery (ACM), is one of the most influential international conferences in multimedia. Since its inception in 1993, it has served as a core venue for academic and industrial exchange in the field, and the China Computer Federation (CCF) lists it as a Class A international conference in computer graphics and multimedia. Competition at ACM MM 2025 was exceptionally fierce: of 4,711 valid submissions received worldwide, only 1,251 papers were accepted after rigorous double-blind review, an acceptance rate of approximately 26.6%. This achievement further highlights Macau University of Science and Technology's research strength in artificial intelligence.

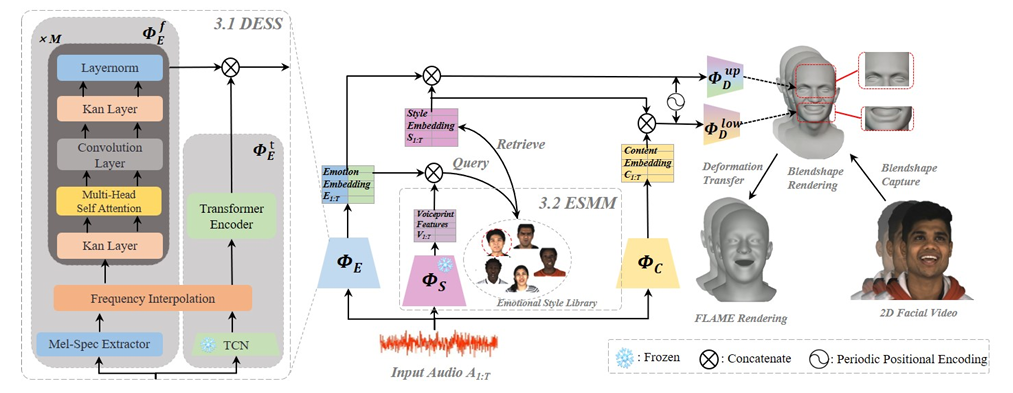

The research focuses on speech-driven 3D facial animation synthesis and proposes PESTalk, a method for generating 3D facial animations with personalized emotional styles. The method consists of two main components: a Dual-Stream Emotion Extractor (DSEE) and an Emotional Style Modeling Module (ESMM). DSEE processes the audio signal along two streams, one capturing temporal changes and the other frequency-domain features, so that it extracts fine-grained emotional features and subtle emotional differences at the same time; this addresses the difficulty of distinguishing similar emotion categories. ESMM establishes a baseline representation for each subject from voiceprint features and progressively refines it by integrating emotional features, so that each subject develops a distinct emotional style for every emotion category rather than repetitive, stereotyped expressions. In addition, the team used an advanced facial capture model to extract pseudo blendshape coefficients from 2D emotional video data and built a large-scale 3D emotional talking-face dataset, 3D-EmoStyle. This dataset both improves the realism of the generated 3D facial animations and helps alleviate the scarcity of emotional 3D facial data.
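To make the two ideas above more concrete, the following is a minimal illustrative sketch, not the paper's implementation. It assumes simple hand-crafted features in place of DSEE's learned networks: a hypothetical temporal stream (per-frame RMS energy and its frame-to-frame change) and a hypothetical frequency stream (log FFT magnitude pooled into bands). It also assumes a moving-average update as a stand-in for ESMM's progressive refinement of a voiceprint-initialized, per-subject, per-emotion style vector. All function and class names, frame sizes, and the 16 kHz sampling rate are illustrative assumptions.

```python
import numpy as np


def frame_signal(audio: np.ndarray, frame_len: int, hop: int) -> np.ndarray:
    """Split a 1-D waveform into overlapping frames of shape (num_frames, frame_len)."""
    num_frames = 1 + (len(audio) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(num_frames)[:, None]
    return audio[idx]


def temporal_stream(frames: np.ndarray) -> np.ndarray:
    """Hypothetical temporal stream: per-frame RMS energy and its frame-to-frame change."""
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    delta = np.diff(rms, prepend=rms[0])  # first delta is 0
    return np.stack([rms, delta], axis=1)  # (num_frames, 2)


def frequency_stream(frames: np.ndarray, num_bands: int) -> np.ndarray:
    """Hypothetical frequency stream: windowed FFT magnitude averaged into equal bands, log-compressed."""
    window = np.hanning(frames.shape[1])
    mag = np.abs(np.fft.rfft(frames * window, axis=1))
    bands = np.array_split(mag, num_bands, axis=1)
    return np.log1p(np.stack([b.mean(axis=1) for b in bands], axis=1))  # (num_frames, num_bands)


def dual_stream_features(audio, frame_len=400, hop=160, num_bands=8):
    """Concatenate both streams frame by frame (assumed frame/hop sizes for 16 kHz audio)."""
    frames = frame_signal(audio, frame_len, hop)
    return np.concatenate(
        [temporal_stream(frames), frequency_stream(frames, num_bands)], axis=1
    )


class EmotionalStyleBank:
    """Hypothetical store of one style vector per (subject, emotion) pair.

    Each vector starts from the subject's voiceprint (the baseline) and is then
    refined with an exponential moving average of observed emotion features.
    """

    def __init__(self, momentum: float = 0.9):
        self.momentum = momentum
        self.styles = {}

    def update(self, subject, emotion, voiceprint, emotion_feat):
        key = (subject, emotion)
        if key not in self.styles:
            self.styles[key] = np.asarray(voiceprint, dtype=float).copy()
        self.styles[key] = (
            self.momentum * self.styles[key] + (1.0 - self.momentum) * emotion_feat
        )
        return self.styles[key]


# Usage: one second of random noise stands in for real speech at an assumed 16 kHz.
rng = np.random.default_rng(0)
audio = rng.standard_normal(16000)
feats = dual_stream_features(audio)  # (98 frames, 2 temporal + 8 frequency dims)
bank = EmotionalStyleBank(momentum=0.9)
style = bank.update("subject_01", "happy", np.zeros(feats.shape[1]), feats.mean(axis=0))
```

Keeping a separate vector per (subject, emotion) pair is what lets two speakers express the same emotion differently in this sketch; the momentum value controls how quickly new emotional evidence overrides the voiceprint baseline.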

▲ Overall flowchart of the proposed method in the paper

This work advances research on speech-driven 3D facial animation. By improving the emotional authenticity and personalization of the generated animations, it supports natural, vivid facial animation in applications such as virtual interaction, digital humans, and film production, and has broad prospects for practical use. Through its methodological innovations and experimental validation, the paper reflects Macau University of Science and Technology's strength in computer vision and artificial intelligence. As first author, Han Tianshun's achievement also reflects the university's success in cultivating high-level research talent.

Macau University of Science and Technology will continue to support cutting-edge scientific research, promote innovation in artificial intelligence, and contribute to global technological progress.